One of my first exposures to algorithms that made automatic adjustments was in travel and transportation. Over three decades ago, deregulation drove airlines to introduce software that managed the availability and pricing of their inventory to maximize profit. These applications were known as ‘yield management’, and many of the brightest and best developers introduced algorithms to automatically adjust buckets of inventory depending on any number of dynamic variables.

These algorithms continue to provide value for organizations, and the massive growth in compute power and storage capacity has expanded the scope of Artificial Intelligence (AI). The ability to capture every click on the internet has created an ‘intelligence’ that we all benefit from and may even be concerned about sometimes.

Just as Pooh learned (or should have learned), all good things need to be enjoyed in moderation. We will find ourselves sick or stuck if we don’t keep things in perspective when deploying the latest solution to ‘smarten up’ our technology. Therefore, when your IT journey includes a great Machine Learning (ML), AI, or Big Data promise and the first sample tastes sweet, make sure:

- the runtime costs of data collection, Machine Learning, analysis, and reporting of the solution(s) are included in your promising new data analytics budget.

- you incorporate some blind spot protection in the IT project with a trusted independent provider so that you are seeing those costs.

- you have considered any conflicts of interest that may exist, for example, a hardware vendor that sells software to help you stay efficient.

Solution providers will continue to entice us with bigger and better real-time analytics. Some of these should be employed, but first try to understand the logic you may be activating when you implement.

Be Cautious When Implementing AI Solutions

Based on our experience working with customers, we have some advice for implementing AI solutions. Before enabling features described as Machine Learning or AI, ask the vendor about their potential impact:

- How much data does the Machine Learning need to determine what is normal over time?

- How does Machine Learning calculate what is normal for metrics?

- What is the overhead to collect and evaluate the data?

- Does the overhead show up in the real-time monitor or the application/address space? If so, how can we separate the cost from the application itself?

If they cannot provide you concrete answers, then we recommend you deploy these new features in a test LPAR with limited resources to contain impacts away from your production environment.
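To make the first two questions above more concrete, here is a minimal Python sketch of one common way a tool might decide what is "normal" for a metric: compare each new value against the mean and standard deviation of its own history. This is an illustration only, not any vendor's actual method. The function name `is_anomalous` and the parameters `min_samples` and `k` are assumptions chosen for the example.

```python
from statistics import mean, stdev


def is_anomalous(history, value, min_samples=30, k=3.0):
    """Classify one new observation against its own history.

    history     -- past observations of a single metric
                   (for example, getpages per interval)
    min_samples -- hypothetical minimum history before judging anything
    k           -- hypothetical number of standard deviations allowed

    Returns None while there is too little history, otherwise True/False.
    """
    if len(history) < min_samples:
        return None  # not enough data yet to say what "normal" is
    mu = mean(history)
    sigma = stdev(history)
    return abs(value - mu) > k * sigma


# Illustrative data: a metric that hovers between 100 and 104.
history = [100 + (i % 5) for i in range(40)]
print(is_anomalous(history, 103))
print(is_anomalous(history, 500))
print(is_anomalous(history[:10], 500))
```

Even in this simple form, every tracked metric needs stored history and a recalculation each interval. Tracking a high-frequency metric at a fine granularity multiplies that work by the number of items tracked, which is why the overhead questions deserve a concrete answer.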

While these types of features undoubtedly provide some benefits, the machine learning calculations combined with real-time data collection may compound the processor demand beyond the value or efficiencies sought. Increasing your overall MIPS consumption might be a costly outcome, but it could also drive unnecessary latency and delay critical path work or drive other stability issues.

‘Real Time Machine Learning’ Maxes Out Processor

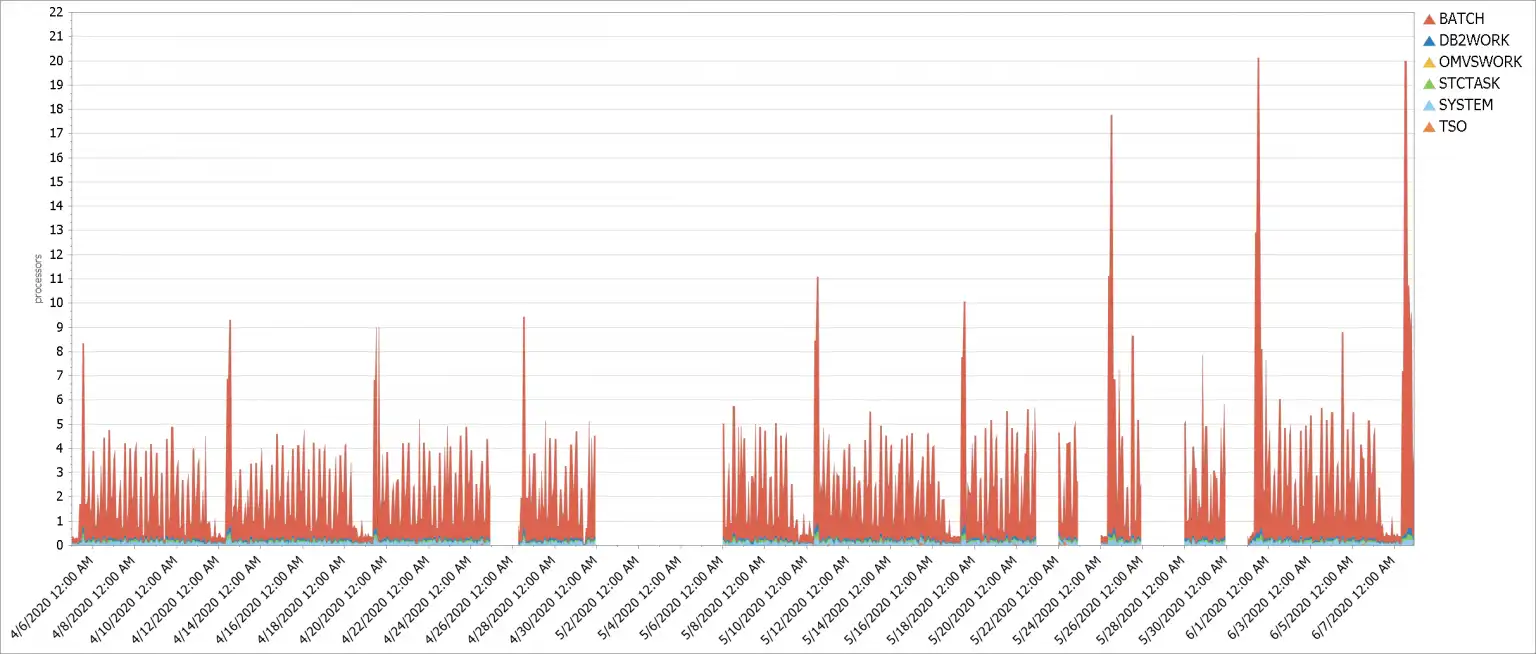

Figure 1: Processor Demand Workload for System Containing “Machine Learning”

The processor demand chart above is for one system on which ‘Machine Learning’ was enabled for metrics that included high-frequency metrics (Db2 getpages) at SQL granularity. The feature was activated by a real-time monitor. The spike in overall demand comes from an underlying batch workload driving heavy Db2 activity.

The processor was maxed out during the last two spikes, and the increase in demand over the intervals leading up to those two spikes is also visible.

Regardless of the technology, it’s always important to weigh the costs against the benefits. Here, the user may well ask how much knowledge the ‘magic’ of real-time Machine Learning actually bought them. The run-time cost was nearly 9 CPs of workload for the batch processing. On current processors, this easily represents more than 10,000 MIPS! Fortunately, the spikes were relatively short-lived.
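The arithmetic behind that estimate can be checked with a short Python sketch. It only back-calculates from the two figures quoted above (roughly 9 CPs and more than 10,000 MIPS). Actual per-CP ratings vary by processor model, so the 1,200 MIPS value in the second call is a hypothetical input, and both function names are invented for this example.

```python
def implied_mips_per_cp(total_mips, cps):
    """Per-CP capacity implied by a total MIPS figure spread over `cps` CPs."""
    return total_mips / cps


def overhead_mips(cps, mips_per_cp):
    """Total MIPS consumed by `cps` CPs at an assumed per-CP rating."""
    return cps * mips_per_cp


# Figures quoted in the text: about 9 CPs, more than 10,000 MIPS.
print(f"{implied_mips_per_cp(10_000, 9):.0f} MIPS per CP")  # 1111 MIPS per CP

# Hypothetical rating of 1,200 MIPS per CP (an assumption, not a measured value).
print(overhead_mips(9, 1_200))  # 10800
```

Substituting the rating for your own processor model gives a rough MIPS figure to set against the value the feature delivers.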

Db2 Package Total Usage And Wait for Authid “Bee”

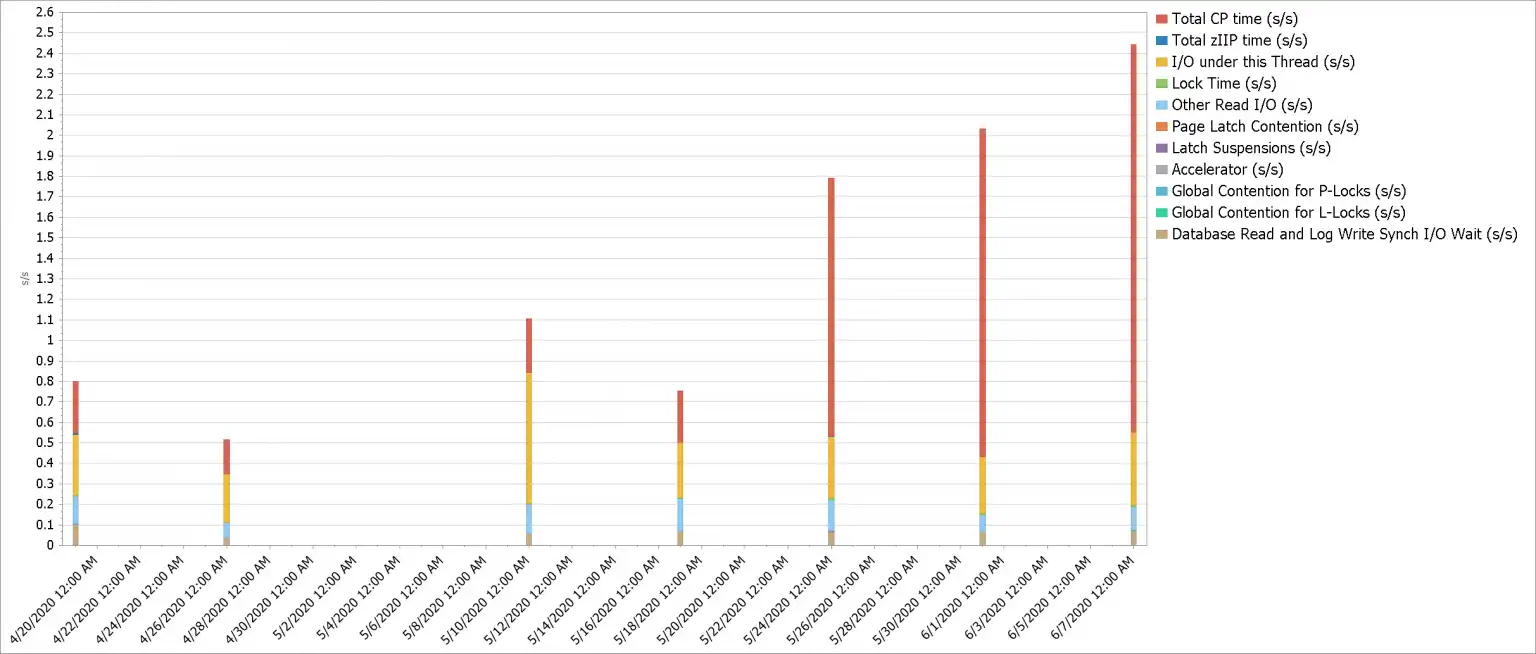

Figure 2: Db2 Package Total Usage And Wait for Authid “Bee”

The Db2 Package Total usage/wait report above breaks down some of the Db2 time into CP time, zIIP time, I/O time, etc. The CPU time for this workload shows a similarly dramatic increase, with all other work remaining within normal variation. The total CP time grew from less than 1/3 of a second to nearly 2 seconds.

Fortunately, the issue was resolved by shutting down these new AI features and the solution provider was contacted regarding the heavy impact.

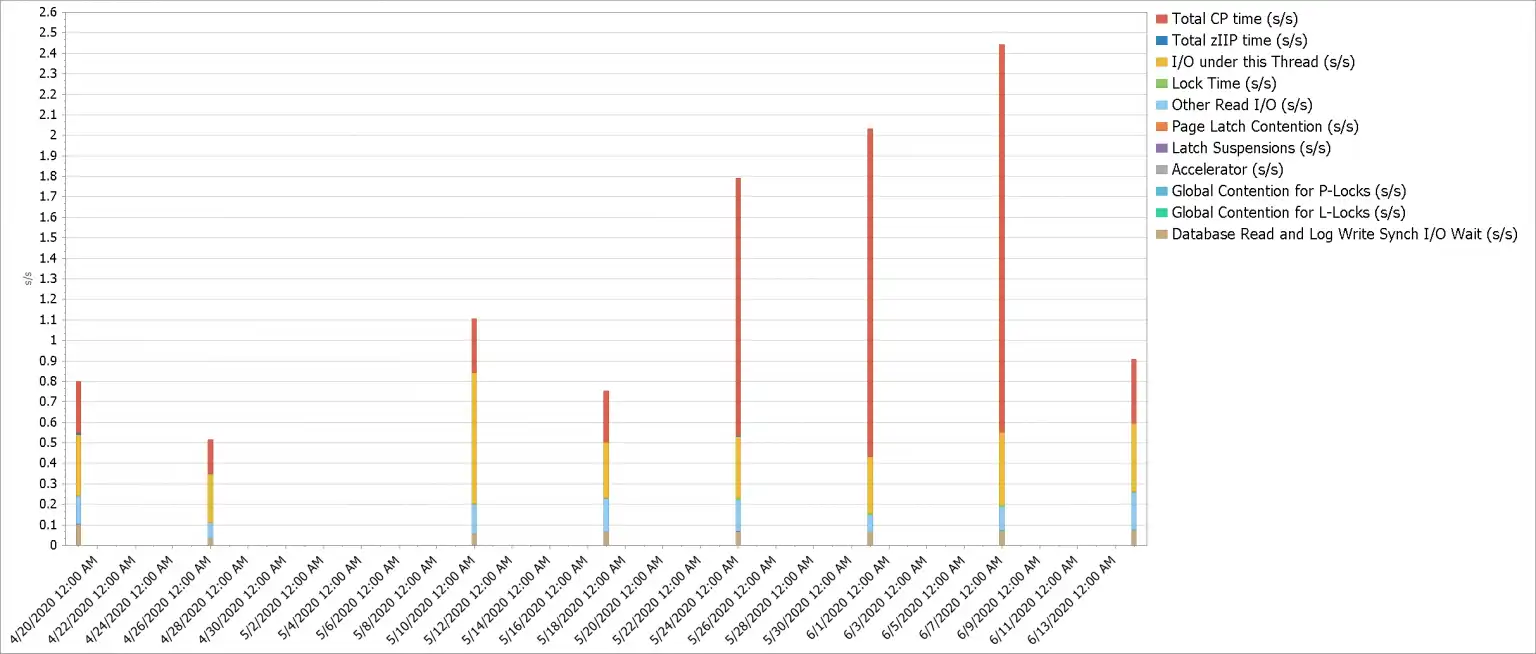

One Week Later: After the AI Features Were Disabled

Figure 3: Db2 type of processing or wait by time consumed for Authid “Bee”

Figure 3 provides the same view with one additional week in the report confirming that assessment.

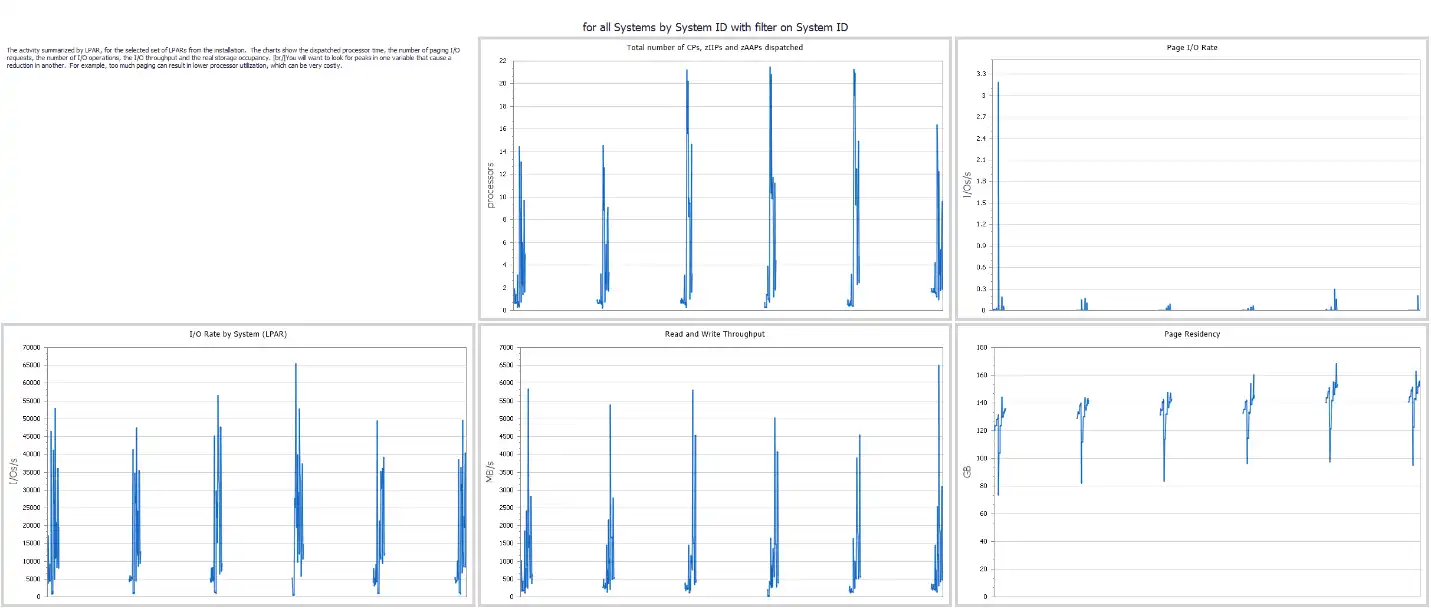

Figure 4: Processor, Paging, and IO Activity by LPAR

A high-level multiple metric view provides some support for the earlier statement that the CPU increases were not driven by additional business activity. The evidence of higher CPU is present while other drivers (I/O, paging, etc.) are not elevated. In fact, the Read/Write Throughput on the system increased while the CPU demand spikes dropped back to more normal levels several weeks prior.

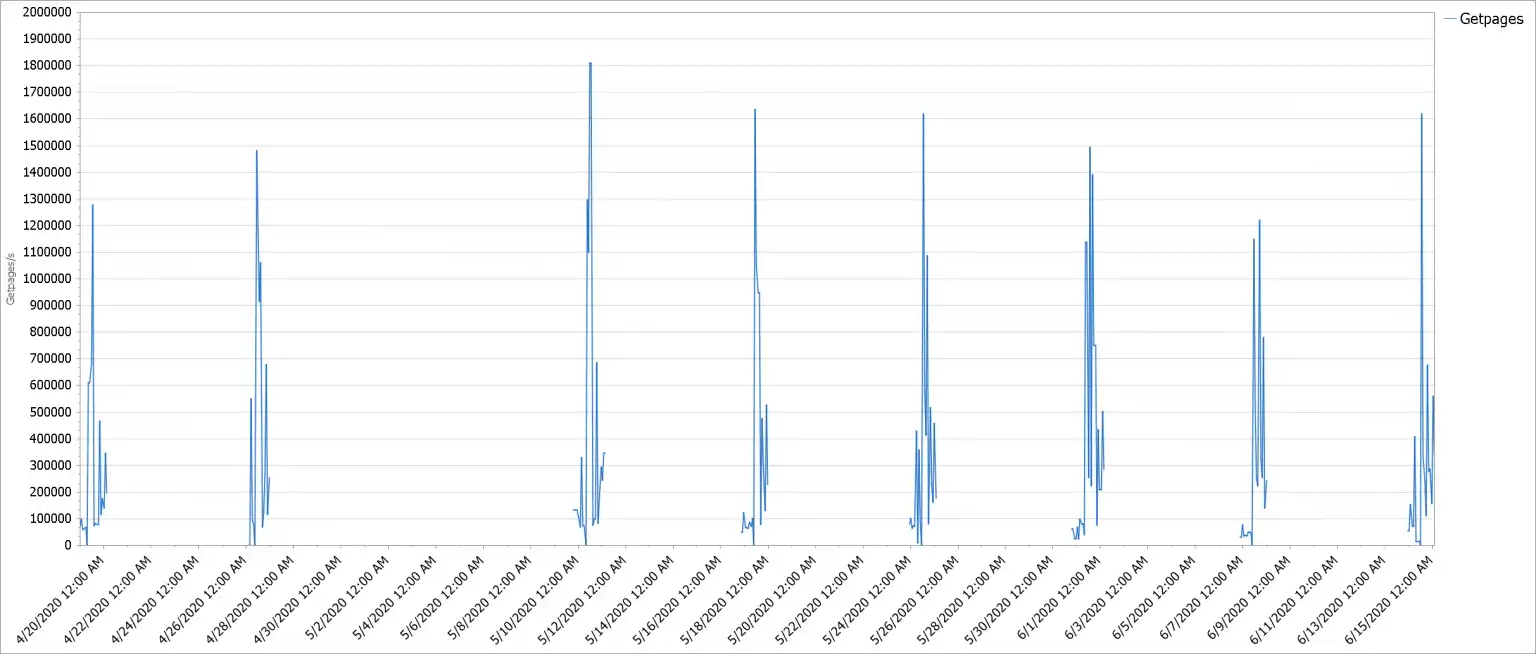

Figure 5: Db2 Getpages

A quick look into getpages for the Db2 system in question also supports this conclusion.

Weigh the Promise of AI with the Costs

There is great promise with the continuing development of Artificial Intelligence and Machine Learning. Applying the relatively inexpensive storage and compute power available to assess and evaluate data faster and more comprehensively than ever before is something we encourage and are continuing to incorporate into our IntelliMagic solutions. Just remember that even though machines are very fast, the run-time costs of solutions should never double the run-time workload demands of your system.
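The "never double" guideline can be written as a simple check. The Python sketch below is an illustration under stated assumptions: the function names and the `max_ratio` parameter are invented here, and the sample inputs (0.33 and 2.0 seconds) are rounded approximations of the "less than 1/3 of a second" and "nearly 2 seconds" CP times reported earlier for the Db2 package.

```python
def overhead_ratio(baseline_cpu, observed_cpu):
    """How many times larger the observed CPU time is than the baseline."""
    if baseline_cpu <= 0:
        raise ValueError("baseline_cpu must be positive")
    return observed_cpu / baseline_cpu


def exceeds_budget(baseline_cpu, observed_cpu, max_ratio=2.0):
    """True when demand has at least doubled (or hit another chosen ratio)."""
    return overhead_ratio(baseline_cpu, observed_cpu) >= max_ratio


# Approximate CP seconds before and after the features were enabled.
print(f"{overhead_ratio(0.33, 2.0):.1f}x")  # 6.1x
print(exceeds_budget(0.33, 2.0))            # True
```

Running a comparison like this on a test system, with the feature off and then on, gives a concrete number to bring to the solution provider.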


The yield management routines that still help the airlines with their inventory and pricing are ‘in-line’ and operating every time folks start shopping for travel. We are blessed to have so many ‘robotic’ assistants helping us lead more productive lives. These algorithms do have a run-time cost, but they are efficient and have been hardened so that the main objectives of the consumer can be met without incurring an observable impact.

Careful examination of your implementation and feature options can prevent costly mishaps and failures on your AI journey. We would welcome a discussion with your teams to help examine your current solution deployments, and partner with your organizations to incorporate smart solutions that will provide timely, meaningful, and powerful analysis of your IT infrastructure.

This article's author

Jack Opgenorth
