I have lived in the same metropolitan area my whole life and have never felt any compulsion to leave my beloved Colorado. As a result, I have had the opportunity to observe the successes and failures of city planners as the Denver area has grown, and continues to grow, over several decades. z/OS has grown in much the same way.

I would like you to envision a portion of z/OS as the roadway system of a growing city. As people have moved into our area, the roadway infrastructure has had to change to accommodate them. I am sure that the city planners a century ago thought that their design would last a very long time, and they were right to a certain extent. Still, it would be ridiculous to assume that the roadways would remain exactly the same several decades later.

Some roads that were considered “minor” in the beginning have had to change as demand has changed. Two-lane roads are now four or six lanes, traffic lights have been installed, road repairs are common, and so on. On the other hand, I still see some things on our roadways that have not been changed in years, and I wonder why they have never been addressed.

Workload Manager (WLM) Goal Mode is that portion of z/OS that I liken to roadway infrastructure. For many shops, the WLM service policy was established a long time ago. For most, several WLM elements and options have been changed, but several key elements and options of the service policy still remain unchanged.

In particular, this blog addresses one WLM option called “I/O Priority Management.” For a more in-depth understanding of Workload Manager and how to manage it, see the IBM resource center and our white paper on the topic, “Managing z/OS Workload Manager (WLM) Goals and Performance.”

History of CPU Resource Management: MVS and WLM Goal Mode

Before the late 1990s, MVS (the predecessor of z/OS) managed CPU resources using performance groups in “compatibility mode.” In a very simplified description, the performance groups were defined “top down” so that the most important work running in MVS received CPU resources first, and the next performance group received CPU only if some was left over. This was done by defining static dispatching priorities. It was easy to conceptualize CPU distribution as a top (most important) to bottom (least important) method.

WLM Goal Mode was first announced in 1994, and shops switched to the newer way of CPU distribution thinking over the next decade. The people responsible for maintaining the compatibility mode definitions were usually also responsible for creating the new WLM service policies. So the CPU top-down distribution methodology was ingrained in MVS performance thinking and was easy to translate into velocity goals as the primary service class goal.

Prevalence of Velocity Goals in Period 1 WLM Service Classes

I took a look at several customers’ WLM service policies and examined their period 1 goals. Excluding the System goals, I noted the percentage of period 1 service class goals in each service policy that are velocity goals. Most service policies define over 70% of their period 1 service class goals as velocity goals. Only one service policy had an even 50% of its period 1 service class goals as velocity goals. Of all the period 1 service class goals I examined, more than 75% were velocity goals.

Half of the service policies that I analyzed still use a “top down” approach, which is similar to the compatibility mode way of thinking. What I consider “top down” is when the high velocity goals sit at the top of the WLM service policy (importance levels 1 and 2).

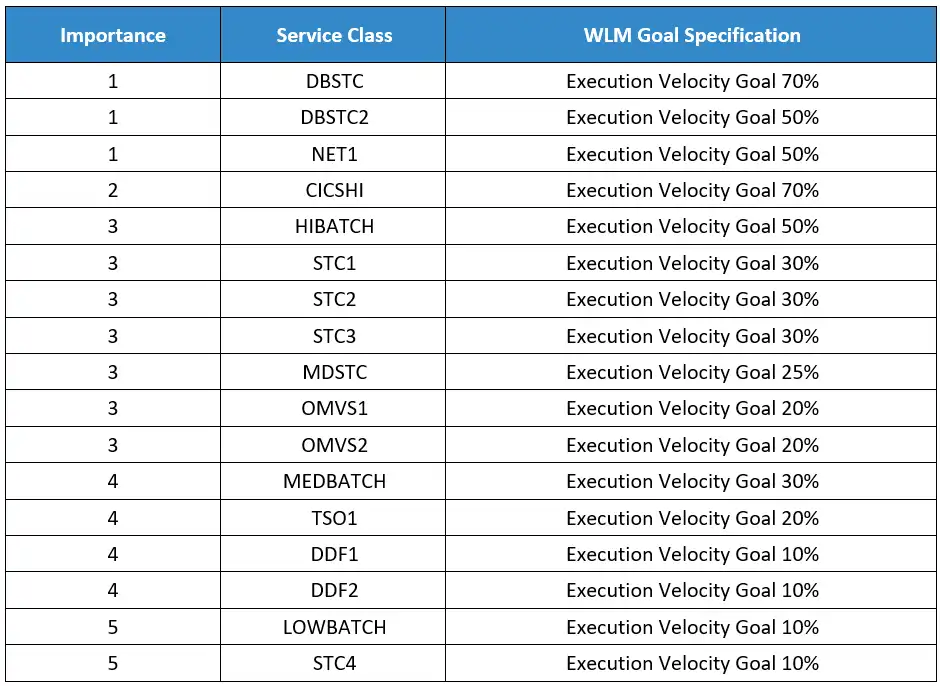

Here is an example of a top-down service policy:

You can see how the higher velocity goals are intended to perform better even during times of resource constraint. This methodology is very similar to the old compatibility mode methodology.

For many of the service policies that I examined, the high velocity goals were generally importance 1 and 2 service classes.

When I see a top-down service policy with mostly velocity goals, my assumption is that the people responsible for z/OS performance are thinking about how much CPU their service classes are receiving, and not about how much I/O their service classes are receiving.

WLM I/O Priority Management

Within every WLM service policy is an option that tells WLM whether or not to use I/O Priority Management. This option is located in the “Service Coefficient/Service Definition Options.”

If the option is turned on, WLM includes the DASD Using and Delay samples when it calculates velocity.

Based on what we have observed at IntelliMagic, leaving this option set to “no” is best.

The reason for this recommendation is that the velocities that are calculated using DASD Using and Delay samples are usually a surprise and can result in unexpected workload management.
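To make the effect on the calculation concrete, here is a minimal Python sketch. It assumes the commonly documented form of execution velocity, Using samples divided by Using plus Delay samples, times 100. The sample counts and the dictionary keys (`cpu_using`, `cpu_delay`, `other_delay`, `dasd_using`, `dasd_delay`) are hypothetical and chosen only for illustration; they are not taken from any real RMF report or from the examples in this blog.

```python
def execution_velocity(using, delay):
    """Velocity = Using / (Using + Delay) * 100, or 0.0 if there are no samples."""
    total = using + delay
    if total == 0:
        return 0.0
    return 100.0 * using / total


def velocity_for_period(samples, io_priority_management):
    """Compute velocity with or without the DASD Using and Delay samples."""
    using = samples["cpu_using"]
    # "other_delay" is a stand-in for non-I/O delays (an assumption of this sketch).
    delay = samples["cpu_delay"] + samples["other_delay"]
    if io_priority_management:
        # With the option on, DASD samples join the calculation.
        using += samples["dasd_using"]
        delay += samples["dasd_delay"]
    return execution_velocity(using, delay)


# Hypothetical sample counts for one service class period.
samples = {
    "cpu_using": 40,
    "cpu_delay": 50,
    "other_delay": 10,
    "dasd_using": 380,
    "dasd_delay": 20,
}

print("I/O Priority Management on: ", velocity_for_period(samples, True))
print("I/O Priority Management off:", velocity_for_period(samples, False))
```

With these made-up counts, the velocity is 84.0 with the option on and 40.0 with it off. A large number of DASD Using samples can lift the reported velocity far above what the CPU samples alone would show.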

I/O Priority Management Example Scenarios

The following are two examples that illustrate the issue. For both examples, I/O Priority Management is turned on.

Example 1:

Importance 1 service class STCA has a velocity goal of 70. The achieved velocity reported by RMF is 91.1.

Using the WLM samples (Using and Delay), the velocity calculated by excluding DASD samples is 30.2 (nowhere near 91.1). If the velocity goal of 70 was written with the understanding that the I/O samples are included and would inflate the overall velocity, then everything would be fine. However, my guess is that the WLM service policy was written with the understanding that CPU was a much bigger part of the velocity calculation.

Turning off I/O Priority Management would result in an achieved velocity of 30.2, well below the goal of 70. WLM would then look for donor service classes for CPU, which is probably what the architect of the service policy intended.

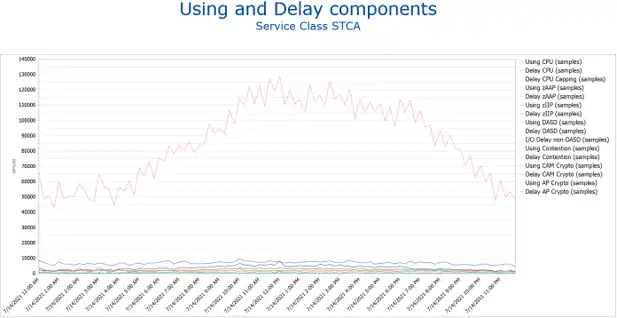

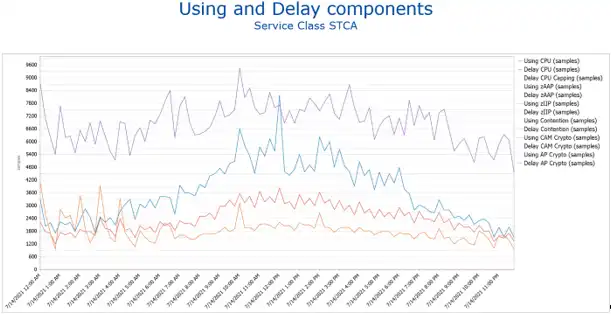

Figures 1 and 2 below illustrate the Using and Delay samples for the service class above across a much longer time period. In the first figure, you can see how DASD samples dominate the service class. Once those samples are removed by turning off I/O priority management, the samples that are normally considered during WLM planning are seen much better.

Example 2:

Importance 4 service class STCB has a velocity goal of 50. The achieved velocity reported by RMF is 93.1.

Using the WLM samples (Using and Delay), the velocity calculated by excluding DASD samples is 41.1. If the velocity goal of 50 was written with the understanding that the I/O samples are included and would inflate the overall velocity, then everything would be fine. With I/O Priority Management on, the achieved velocity of 93.1 is well above the goal, so WLM would look at this service class as a potential donor (a lower-importance, overachieving service class).

Turning off I/O Priority Management would result in an achieved velocity of 41.1, which is below the goal of 50, so WLM would not look at this service class as a donor for CPU.
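One way to compare the two examples is through the performance index (PI). For a velocity goal, the PI is generally described as the goal divided by the achieved velocity, so a PI above 1 means the goal is being missed. The Python sketch below applies that definition to the numbers quoted in the two examples. The function and field names are illustrative, and the "missing goal" label is a simplification of how WLM actually selects donors and receivers.

```python
def performance_index(goal_velocity, achieved_velocity):
    """PI for a velocity goal: goal divided by achieved velocity."""
    if achieved_velocity <= 0:
        raise ValueError("achieved velocity must be positive")
    return goal_velocity / achieved_velocity


def goal_status(pi):
    """Simplified reading of the PI: above 1 means the goal is missed."""
    return "missing goal" if pi > 1.0 else "meeting goal"


# (service class, importance, goal, velocity with DASD samples, velocity without)
# The numbers are the ones quoted in Example 1 and Example 2.
EXAMPLES = [
    ("STCA", 1, 70, 91.1, 30.2),
    ("STCB", 4, 50, 93.1, 41.1),
]


def summarize(examples):
    """Return one row per service class with the PI under both settings."""
    rows = []
    for name, importance, goal, with_io, without_io in examples:
        rows.append({
            "name": name,
            "importance": importance,
            "pi_with_io": performance_index(goal, with_io),
            "pi_without_io": performance_index(goal, without_io),
        })
    return rows


for row in summarize(EXAMPLES):
    print(
        f"{row['name']} (importance {row['importance']}): "
        f"option on PI={row['pi_with_io']:.2f} ({goal_status(row['pi_with_io'])}), "
        f"option off PI={row['pi_without_io']:.2f} ({goal_status(row['pi_without_io'])})"
    )
```

Under this definition, STCA moves from a PI of about 0.77 with the option on to about 2.32 with it off, and STCB moves from about 0.54 to about 1.22. In both cases a service class that appears to overachieve turns out to be missing its goal once the DASD samples are excluded.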

Understanding When to Turn I/O Priority Management On or Off

This blog entry has given you the knowledge that you need to make informed decisions about the I/O Priority Management option. If you are concerned about how I/O is prioritized with this option turned off, rest assured that the I/O priority will still follow the assigned dispatch priority.

Regardless of which option you are using or choose to use, the Using and Delay samples can be obtained for any service class period. Analyzing your Using and Delay samples will allow you to understand how WLM is calculating your velocity and help you decide the best option for you.

Revisiting this one option of your WLM service policy will help keep your z/OS roadway running smoothly and as expected. Feel free to reach out to us at IntelliMagic if you would like assistance or further explanation.

This article's author

Jerry Street
