The cloud is the ultimate in infrastructure commoditization, driving costs down to a bare minimum and charging end users only for what they use. CIOs and directors are asking for workloads to move to the cloud primarily to save costs.

Most organizations have private clouds, and some have moved workloads into public clouds. For the purposes of this article, I will focus on the public cloud. According to TechTarget, “A public cloud is one based on the standard cloud computing model, in which a service provider makes resources, such as applications and storage, available to the general public over the internet. Public cloud services may be free or offered on a pay-per-usage model.”

The cloud provides an economic model for computing that may work well for some workloads, so the trick is to figure out which ones are a good fit.

Workloads fit for the cloud

Not all workloads are a good fit for the cloud. Let’s start with platforms. The public cloud is an infrastructure platform built on open-systems standards. Typical enterprise z/OS workloads (CICS, DB2, IMS) are not good candidates for the public cloud simply because of the operating system they run on. There are, however, some exceptions to this rule:

- Workloads that run on z/Linux: These are often web servers and web application servers. They may or may not be good candidates for the cloud, but they are at least platform-ready.

- Workloads that have SaaS alternatives and are non-critical: Analyzing mainframe RMF/SMF data is a good example of a workload that can be moved to the cloud. It is resource-intensive, which makes it expensive to run on the z/OS platform, and although it is extremely important to the availability of the system (if used correctly), it is not directly tied to end-user transactions.

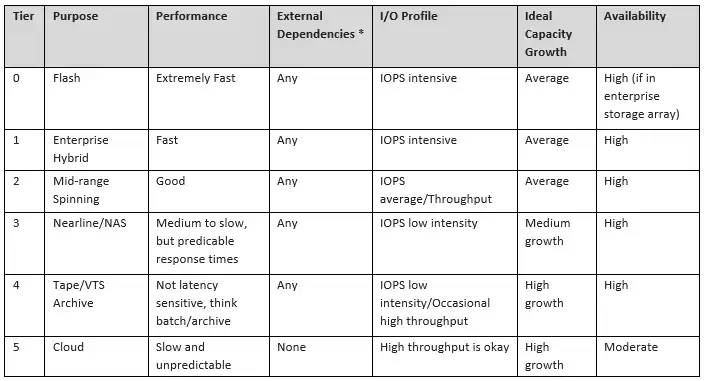

So let’s assume for a moment that you have identified potential workloads that can be migrated to the cloud based on platform compatibility. The next step is to think about those workloads in terms of storage tiers. Although the cloud comes in various options, it should be considered just another tier. If you have not already created your own internal tiers, start by defining them in terms of performance, I/O profile, availability, external dependencies, and capacity growth.

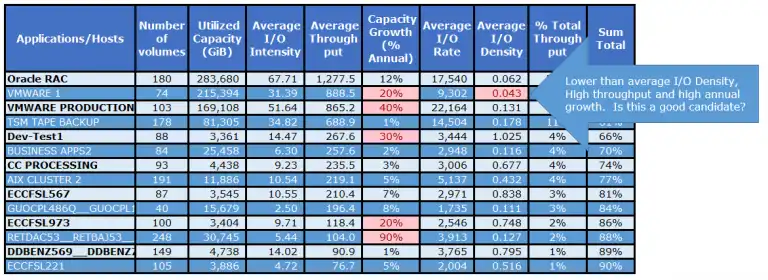

When identifying cloud workloads with cost as the driver and assuming that cloud capacity is cheap, look for:

- High capacity growth workloads: Look for workloads that use a lot of capacity and grow at twice the average data growth rate or more. The more capacity a workload uses, the higher your cost savings, since capacity in the cloud tends to be cheaper than private infrastructure capacity.

- Throughput-intensive applications: Look for the top applications in terms of throughput. These are often development, reporting, or analytics workloads that are not especially sensitive to I/O response time but consume a significant share of computing resources.

- Low I/O density workloads: Look for workloads with a low I/O density. I/O density measures how many I/O operations per second (IOPS) occur per GB of capacity. Gather a baseline I/O density across all workloads and treat 0.5 × the baseline as the cutoff for low density. Start with the bottom 10 and work your way up in I/O density for each environment being evaluated. These are often the same workloads you uncovered in your capacity or throughput assessments. If these workloads meet the following criteria, they may be good candidates for the cloud:

  - Platform support

  - Not sensitive to end-user response time

  - No on-premises dependencies
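The three screens above (capacity growth, throughput, and I/O density) can be combined into a small script that turns per-workload metrics into a shortlist. This is a minimal sketch, not IntelliMagic's method: the `Workload` fields, the `screen_candidates` function, the sample workload names, and the `top_n` parameter are all hypothetical. It also assumes the I/O density baseline is total IOPS divided by total capacity (an average of per-workload densities would be equally defensible), and it applies the three criteria to every flagged workload rather than only to the low-density ones.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class Workload:
    name: str
    environment: str               # hypothetical labels such as "prod" or "dev"
    capacity_gb: float             # allocated capacity in GB
    growth_rate: float             # capacity growth per period, e.g. 0.30 for 30%
    throughput_mbps: float         # average throughput in MB/s
    iops: float                    # average I/O operations per second
    platform_ready: bool           # runs on a cloud-compatible platform (not z/OS)
    response_time_sensitive: bool  # end users notice its response time
    on_prem_dependencies: bool     # depends on systems that must stay on premises


def io_density(w: Workload) -> float:
    """I/O density: IOPS per GB of capacity."""
    return w.iops / w.capacity_gb


def screen_candidates(workloads: list[Workload], top_n: int = 10) -> list[str]:
    """Return names of cloud candidates, lowest I/O density first."""
    # Capacity growth: flag workloads growing at least twice as fast as average.
    avg_growth = mean(w.growth_rate for w in workloads)
    high_growth = {w.name for w in workloads if w.growth_rate >= 2 * avg_growth}

    # Throughput: flag the top_n workloads by throughput.
    by_throughput = sorted(workloads, key=lambda w: w.throughput_mbps, reverse=True)
    top_throughput = {w.name for w in by_throughput[:top_n]}

    # I/O density: assumed baseline = total IOPS / total GB across all workloads.
    # Within each environment, take the bottom top_n and keep those below 0.5 x baseline.
    baseline = sum(w.iops for w in workloads) / sum(w.capacity_gb for w in workloads)
    threshold = 0.5 * baseline
    by_env: dict[str, list[Workload]] = defaultdict(list)
    for w in workloads:
        by_env[w.environment].append(w)
    low_density: set[str] = set()
    for env_workloads in by_env.values():
        lowest = sorted(env_workloads, key=io_density)[:top_n]
        low_density |= {w.name for w in lowest if io_density(w) < threshold}

    flagged = high_growth | top_throughput | low_density

    # Keep flagged workloads that meet all three criteria: platform support,
    # no end-user response-time sensitivity, no on-premises dependencies.
    return [
        w.name
        for w in sorted(workloads, key=io_density)
        if w.name in flagged
        and w.platform_ready
        and not w.response_time_sensitive
        and not w.on_prem_dependencies
    ]


if __name__ == "__main__":
    # Hypothetical sample data for illustration only.
    sample = [
        Workload("reporting", "prod", 5000, 0.40, 300, 500, True, False, False),
        Workload("cics-orders", "prod", 2000, 0.05, 200, 8000, False, True, True),
        Workload("dev-test", "dev", 3000, 0.10, 50, 300, True, False, False),
        Workload("web-portal", "prod", 500, 0.10, 20, 2000, True, True, False),
    ]
    print(screen_candidates(sample, top_n=1))  # prints ['reporting', 'dev-test']
```

Using a union of the three screens reflects the observation that they tend to surface the same workloads; the vetting criteria then act as a hard filter on whatever any screen flags.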

Once you have a candidate list of cloud workloads, it needs to be vetted further. The criteria for demoting workloads to the cloud should be developed by a cross-disciplinary team that includes business unit stakeholders and IT security and compliance engineers.

Assess the Business Requirements/Risk

The following questions are based on our experience working through the process with security teams for our own SaaS offering; they are not intended to cover every possibility.

- Do these workloads contain personally identifiable information (PII)?

- Does the data contain business-critical information, domain expertise, or anything else that would seriously hurt your business if your competitors got hold of it?

- Is the candidate data subject to rules that specify where it must be located?
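One way to make the questions above repeatable is to encode the answers for each workload as hard gates. This is a minimal sketch under stated assumptions: the `DataProfile` type, the `vet` function, and the region names are hypothetical; a "yes" to either of the first two questions is treated as disqualifying; and a data-location rule is treated as satisfied only if the provider offers at least one permitted region.

```python
from dataclasses import dataclass, field


@dataclass
class DataProfile:
    name: str
    contains_pii: bool
    competitively_sensitive: bool  # business-critical data or domain expertise
    # Regions the data is allowed to reside in; empty means no location rule.
    allowed_regions: set[str] = field(default_factory=set)


def vet(profile: DataProfile, provider_regions: set[str]) -> tuple[bool, list[str]]:
    """Return (approved, reasons); any reason blocks the move pending review."""
    reasons: list[str] = []
    if profile.contains_pii:
        reasons.append("contains PII")
    if profile.competitively_sensitive:
        reasons.append("business-critical or competitively sensitive")
    # Assumption: a location rule is met if any permitted region is offered.
    if profile.allowed_regions and not (profile.allowed_regions & provider_regions):
        reasons.append("no provider region satisfies the data-location rules")
    return (not reasons, reasons)


if __name__ == "__main__":
    regions = {"us-east", "eu-central"}  # hypothetical provider regions
    print(vet(DataProfile("rmf-smf-reports", False, False, {"eu-central"}), regions))
    # prints (True, [])
    print(vet(DataProfile("customer-master", True, False), regions))
    # prints (False, ['contains PII'])
```

Returning the reasons, not just a yes/no, gives the cross-disciplinary team something concrete to review when a workload is rejected.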

There are many considerations when migrating workloads to the cloud. To realize potential cost savings and reduce availability risks, stakeholders from various groups will need to work together to define the appropriate criteria. The performance characteristics of each workload, including its access density, throughput intensity, and availability requirements, will need to be considered. The business units will also need to inform IT of any data that cannot be moved to the cloud because of regulatory or other business requirements. If, for example, the data contains PII or business-critical information, it is not a good candidate for the cloud. IntelliMagic has years of experience characterizing workloads and assessing performance and availability requirements.

For an assessment of your workloads to identify potential cloud candidates, please contact us.

This article's author

Brett Allison